US20030115552A1 - Method and system for automatic creation of multilingual immutable image files - Google Patents

- Publication number

- US20030115552A1 (application US10/303,819)

- Authority

- US

- United States

- Prior art keywords

- text

- language

- translation

- image

- immutable

- Legal status (The legal status is an assumption and is not a legal conclusion. Google has not performed a legal analysis and makes no representation as to the accuracy of the status listed.)

- Abandoned

Classifications

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F40/00—Handling natural language data

- G06F40/40—Processing or translation of natural language

-

- G—PHYSICS

- G06—COMPUTING; CALCULATING OR COUNTING

- G06F—ELECTRIC DIGITAL DATA PROCESSING

- G06F9/00—Arrangements for program control, e.g. control units

- G06F9/06—Arrangements for program control, e.g. control units using stored programs, i.e. using an internal store of processing equipment to receive or retain programs

- G06F9/44—Arrangements for executing specific programs

- G06F9/451—Execution arrangements for user interfaces

- G06F9/454—Multi-language systems; Localisation; Internationalisation

Definitions

- The present invention relates generally to data processing systems and, more particularly, to data processing systems for automatically generating multilingual immutable image files containing text.

- Data processing devices have become valuable assistants in a rapidly expanding number of fields where access to and/or processing of data is necessary.

- Applications for data processing devices range from office applications such as text processing, spreadsheet processing, and graphics applications to personal applications such as e-mail services, personal communication services, entertainment applications, banking applications, purchasing or sales applications, and information services.

- Data processing devices for executing such applications include any type of computing device, such as multipurpose data processing devices including desktop or personal computers, laptop and palmtop computing devices, personal digital assistants (PDAs), and mobile communication devices such as mobile telephones or mobile communicators.

- A data processing device used to provide a service, such as a server maintaining data that can be accessed through a communication network, may be located at a first location, while another data processing device used to obtain a service, such as a client device operated by a user for accessing and manipulating the data, may be located at a second location remote from the first location.

- Servers which provide services are frequently configured to be accessed by more than one client at a time.

- A server application may enable users at any location and from virtually any data processing device to access personal data maintained at a server device or a network of server devices. Accordingly, users of the client devices may be located in different geographic areas that support different languages, or may individually have different language preferences.

- The term “user” as used herein refers to a human user, software, hardware, or any other entity using the system.

- A user operating a client device may connect to the server device or network of server devices using personal identification information to access data maintained there, such as an e-mail application or a text document.

- The server in turn may provide information to be displayed at the client device, enabling the user to access and/or control applications and/or data at the server.

- This may include the display of a desktop screen, including menu information, or any other kind of information allowing selection and control of applications and data at the server.

- At least some portions of this information may be displayed at the client device using a screen object whose visual elements are stored in an immutable image file containing text information.

- A “screen object” is an entity that is displayed on a display screen. Examples of screen objects include screen buttons and icons.

- An “immutable image file” is a file that is not character based, but rather bit based. Thus, editing such files to change any text therein is very difficult. Examples of such files include files created in formats such as .gif, bitmap (.bmp), .tiff, .jpg, .png, .xpm, .tga, .mpeg, .ps, .pdf, .pcx, and other graphic files.

- This text information may provide information on a specific function which may be activated by selecting the screen object (e.g., by clicking on a button using a mouse and/or cursor device).

- The formatting of immutable image files such as .gif files does not generally support extraction of the text strings (e.g., in ASCII format) that a viewer perceives in a rendering of the image file, because immutable image files (e.g., bitmap (.bmp), .tiff, .jpg, .png, .xpm, .tga, .mpeg, .ps, .pdf, .pcx, and other graphic files) are generally created from graphics information rather than text string information (i.e., in bitmap format instead of ASCII or another character-based format).

- The text information of the images is preferably provided in the language understood by each individual user. This, however, requires that the text information of the images be provided in different languages.

- The text strings in the images may be translated into different languages. For a small number of images this poses no problem, since each immutable image can be recreated manually for each language; manual translation is neither feasible nor cost-effective, however, when a large number of immutable images must be provided in different languages.

- An application provided by a server or network of servers, such as an office application, may include a very large number of menus in different layers, including hundreds of individual menus or immutable images containing text. If the service is available in many geographic areas supporting different languages, a very large number of translation and image creation operations is necessary. For example, if an application provides 100 different immutable images (e.g., .gif files or bitmap files) containing text, and translation into 20 different languages is necessary, a total of 2000 translation and image creation operations may be necessary.
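The figure of 2000 is simply the number of (image, language) pairs. A trivial sketch, assuming as the example does that each pair costs one combined translation and image-creation operation:

```python
def operations_needed(num_images: int, num_languages: int) -> int:
    # One combined translation + image-creation operation per
    # (image, language) pair, as in the 100 x 20 example.
    return num_images * num_languages


print(operations_needed(100, 20))  # 2000
```

Because the count grows multiplicatively, adding a single language to a 100-image application adds 100 operations, which is what motivates automating the per-pair work.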

- Methods and systems consistent with the present invention provide an improved translation system that allows users to view screen objects of a user interface in different languages even though the screen object's visual elements are stored in an immutable image file (e.g., a file having a graphic format such as .gif, bitmap (.bmp), .tiff, .jpg, .png, .xpm, .tga, .mpeg, .ps, .pdf, or .pcx).

- The textual elements of the screen objects are associated with the image files as text strings.

- The improved translation system automatically translates the text strings into different languages and generates image files that contain the translated textual elements.

- These image files are made available so that when users wish to display a user interface, it is displayed in the language of their choice. For example, a browser user may select a language and receive web pages from a web server in that language.

- Such functionality facilitates the international use of web sites.

- A method in a data processing system for localizing an immutable image file containing text in a first language is provided.

- The method translates the text from the first language into a second language that is different from the first language, and automatically generates a translated immutable image file containing the text in the second language.

- A computer-readable medium is also provided.

- This computer-readable medium is encoded with instructions that cause a data processing system for localizing an immutable image file containing text in a first language to perform a method.

- The method translates the text from the first language into a second language that is different from the first language, and automatically generates a translated immutable image file containing the text in the second language.

- A data processing system is also provided for localizing an immutable image file containing text in a first language.

- The data processing system comprises an immutable image file creation system that translates the text from the first language into a second language that is different from the first language and automatically generates a translated immutable image file containing the text in the second language, and a processor for running the immutable image file creation system.
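The claimed method reduces to two steps: translate the text, then generate a new immutable image containing it. The following is a minimal Python sketch of that control flow only. `ImageSpec`, `localize`, and the `<base>_<lang>.gif` naming are hypothetical, and rasterization is passed in as a callable because the document delegates it to an image manipulation program.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# (text, source_lang, target_lang) -> translated text
Translator = Callable[[str, str, str], str]
# (text, output_file_name) -> encoded image bytes, e.g. from an image program
Renderer = Callable[[str, str], bytes]


@dataclass(frozen=True)
class ImageSpec:
    """Hypothetical description of an immutable image that contains text."""
    base_name: str   # e.g. "Cancel"
    text: str        # the text shown in the image
    language: str    # language of `text`, e.g. "en"


def localize(spec: ImageSpec, target_language: str,
             translate: Translator, render: Renderer) -> Tuple[str, bytes]:
    """Translate the image text, then generate a new image file for it."""
    if target_language == spec.language:
        raise ValueError("the second language must differ from the first")
    translated = translate(spec.text, spec.language, target_language)
    output_name = f"{spec.base_name}_{target_language}.gif"
    return output_name, render(translated, output_name)
```

In a test, `render` can be a stub such as `lambda text, name: text.encode()`; in a real deployment it would drive the image program that produces the .gif or bitmap.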

- FIGS. 1a-1b depict block diagrams of an exemplary data processing system suitable for using immutable image files in a number of languages.

- FIG. 2 depicts a block diagram of a data processing system for automatically generating localized immutable image files containing text, the system suitable for practicing methods and systems consistent with the present invention.

- FIG. 3 depicts a flowchart illustrating steps of a method for automatically generating localized immutable image files containing text in accordance with methods, systems, and articles of manufacture consistent with the present invention.

- FIG. 4 depicts a block diagram illustrating a logical flow of an exemplary system for automatically generating localized immutable image files containing text in accordance with methods, systems, and articles of manufacture consistent with the present invention.

- FIG. 5 depicts a block diagram illustrating a logical flow of an exemplary system for automatically generating localized immutable image files containing text in accordance with methods, systems, and articles of manufacture consistent with the present invention.

- FIG. 6 depicts a flowchart illustrating steps of an exemplary method for automatically generating immutable image files containing text in a number of languages in accordance with methods, systems, and articles of manufacture consistent with the present invention.

- FIG. 7 depicts a flowchart illustrating steps of an exemplary method for automatically creating immutable image files having text in a number of languages in accordance with methods, systems, and articles of manufacture consistent with the present invention.

- FIG. 8 depicts a flowchart illustrating steps of an exemplary method for automatically creating immutable image files in a number of languages in accordance with methods, systems, and articles of manufacture consistent with the present invention.

- FIGS. 9a-9b depict flowcharts illustrating steps of an exemplary method for identifying text and translating the text.

- FIG. 10 depicts a flowchart illustrating steps of an exemplary method for identifying text and translating the text both in source code text strings and in software related text.

- FIGS. 11a-11b depict flowcharts illustrating steps of an exemplary method for identifying text and translating the text both in source code text strings and in software related text.

- FIG. 12 depicts a flowchart illustrating steps of an exemplary method for identifying text element types and searching for matching strings in a database.

- FIG. 13 depicts a flowchart illustrating steps of an exemplary method for validating a translation element and storing the validated element in a database.

- FIG. 14 depicts an exemplary table in a pretranslation database.

- FIG. 15a depicts an exemplary display for a user processing a translation of text using exact matching.

- FIG. 15b depicts a flowchart illustrating exemplary method steps for a user, a computer, and a database for translating text using exact matching.

- FIG. 16a depicts an exemplary display for a user processing the translation of text using fuzzy matching.

- FIG. 16b depicts a flowchart illustrating exemplary method steps for a user, a computer, and a database for translating text using fuzzy matching.

- FIG. 17 depicts an exemplary system for translating text using a pretranslation database.

- FIG. 18 depicts an exemplary sentence based electronic translation dictionary.

- FIG. 19 depicts an exemplary networked system for use with an exemplary translation technique.

- FIG. 1a depicts a block diagram of an exemplary data processing system including a client 102, a client 104, and a server 100, similar to a data processing system for implementing StarOffice™ running in Sun One Webtop, developed by Sun Microsystems, in which a number of client devices may access the server device 100 to use a service application such as a word processor, a spreadsheet application, or a graphics application.

- Client 102 or client 104 may include a human user or may include a user agent.

- Clients 102 and 104 include browsers 106 and 108, respectively.

- Browsers 106 and 108 may be used for displaying data provided by the server 100 (e.g., HTML data or XML data).

- The data may include references to immutable image files containing text (e.g., .gif files or bitmap files), which may be downloaded to the client browsers 106 and 108 for display as images such as control buttons or icons.

- Clients 102 and 104 may communicate with server 100 through communication networks that include a large number of computers, such as local area networks or the Internet. Access to information located in client 102, client 104, or server 100 may be obtained through wireless communication links, fixed wired communication links, or any other communication means. Standard protocols for accessing and/or retrieving data files over a communication link, for example over a communication network, may be employed, such as the HyperText Transfer Protocol (“HTTP”).

- FIG. 1b depicts a block diagram of the exemplary data processing system of FIG. 1a, more particularly showing browser displays 120 and 130 on clients 102 and 104, respectively, of immutable images 122 and 132 having corresponding text in different languages.

- Server 100 includes a secondary storage device 140 which includes a German image file 142, displayed as a button 122 on the browser display 120 of client 102, and an English image file 144, displayed as a button 132 on the browser display 130 of client 104.

- Each of clients 102 and 104 may specify a language in order to be provided with image files in the appropriate language for display during execution of the same general application, such as a word processing application, which may be executed as a separate process for each individual user.

- Thus, each individual user may use the application and receive screen displays including text information in the preferred language of that user.
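The document does not say how a client specifies its language. One common mechanism for browsers is the HTTP `Accept-Language` request header. The sketch below, an assumption rather than the patent's method, picks the best available language from that header and maps it to a per-language image path; the `images/<lang>/<file>` layout is hypothetical and the q-value parsing is simplified.

```python
def parse_accept_language(header):
    """Return (language tag, q) pairs from an Accept-Language value, best first."""
    prefs = []
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        tag, _, params = part.partition(";")
        q = 1.0
        for param in params.split(";"):
            name, _, value = param.strip().partition("=")
            if name.strip() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        prefs.append((tag.strip().lower(), q))
    # sorted() is stable (also with reverse=True), so equal q keeps header order.
    return sorted(prefs, key=lambda pref: pref[1], reverse=True)


def choose_language(header, available, default="en"):
    """Pick the first acceptable language the server has images for."""
    for tag, q in parse_accept_language(header):
        if q <= 0:
            continue  # q=0 means "not acceptable"
        if tag == "*":
            return default
        for candidate in (tag, tag.split("-")[0]):  # "de-de" falls back to "de"
            if candidate in available:
                return candidate
    return default


def image_path(file_name, header, available):
    """Hypothetical layout: one directory of image files per language."""
    return f"images/{choose_language(header, available)}/{file_name}"
```

For example, a browser sending `de-DE,de;q=0.9,en;q=0.8` to a server holding English and German images would receive `images/de/Cancel.gif`, which corresponds to the German image file 142 in FIG. 1b.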

- FIG. 2 depicts a block diagram of a data processing system 200, suitable for practicing methods and systems consistent with the present invention, which provides immutable image files 232 and 234 having text in a number of languages.

- FIG. 2 particularly illustrates how immutable images containing text strings in different languages may be created with reduced complexity and cost.

- System 200 includes a server 201 for generating the immutable image files and a server 260 for translating strings in particular languages into different languages.

- Servers 201 and 260 communicate with each other via a connection 245, which may be a direct connection or a network connection such as a LAN connection or a WAN connection (e.g., the Internet).

- Text strings in a first language are identified on the server 201 and are transferred to the server 260 by a translator module 218 on the server 201 as a file of text strings 280 for translation into at least one second language that is different from the first language.

- After translation, the text strings are transferred back to the server 201 for processing to generate immutable image files containing the text strings in different languages.

- The immutable image files containing the text strings may then be used in applications so that users may view screen objects on a display in their choice of language.

- Server 260 includes a CPU 262, a secondary storage device 268 which includes a translation database 270, a display 265, an I/O device 264, and a memory 266, which communicate with each other via a bus 291.

- Memory 266 embodies an operating system 295 and a translation module 278, which inputs text strings from the file of text strings 280 and translates the strings into different languages using the translation database 270.
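The lookup performed by the translation module 278 against the translation database 270 can be approximated with a single table. This is a sketch using Python's built-in `sqlite3`; the table layout and function names are assumptions, not the schema the document uses. Untranslated strings are returned separately so they can be routed to a human or external translator.

```python
import sqlite3


def open_translation_db(path=":memory:"):
    """Create (if needed) a minimal translation table keyed by source text and languages."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS translations ("
        " source_text TEXT NOT NULL, source_lang TEXT NOT NULL,"
        " target_lang TEXT NOT NULL, target_text TEXT NOT NULL,"
        " PRIMARY KEY (source_text, source_lang, target_lang))"
    )
    return conn


def add_translation(conn, source_text, source_lang, target_lang, target_text):
    conn.execute(
        "INSERT OR REPLACE INTO translations VALUES (?, ?, ?, ?)",
        (source_text, source_lang, target_lang, target_text),
    )


def translate_strings(conn, strings, source_lang, target_lang):
    """Return (translated, missing): found translations and strings with no entry."""
    translated, missing = {}, []
    for s in strings:
        row = conn.execute(
            "SELECT target_text FROM translations"
            " WHERE source_text = ? AND source_lang = ? AND target_lang = ?",
            (s, source_lang, target_lang),
        ).fetchone()
        if row is None:
            missing.append(s)
        else:
            translated[s] = row[0]
    return translated, missing
```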

- One suitable translation process for use with methods and systems consistent with the present invention is described below. However, other processes may also be used.

- The translated text strings are then transferred back to server 201 via the connection 245.

- Alternatively, the elements of servers 260 and 201 may all be located on one device.

- Server 201 includes a CPU 202, a secondary storage device 208, a display 205, an I/O device 204, and a memory 206, which communicate with each other via a bus 231.

- Memory 206 embodies an operating system 250, a translator module 218, a parser 220, and a script 222. The script 222 is associated with an image manipulation program 224 and, when initiated, inputs text information from a text string file 226 and a template 230 to generate immutable image files 232 and 234, each of which typically contains text in a different language.

- The script 222 may be initiated by a call entered by a user through the operating system 250, or by a call included in a batch file that is executed by a user or system command.

- A batch file of a number of calls to the script 222 may be stored in the secondary storage device 208 for access by a user or a system in order to generate a number of immutable image files in a number of different languages and for a number of different base images (e.g., cancel button, system setting buttons).
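A batch file of script calls can be generated from the templates and the translated strings. The sketch below emits one GIMP command line per (template, language) pair. GIMP's `-i` (no interface) and `-b` (batch command) options exist, but the Script-Fu procedure `make-text-button` and its argument order are hypothetical stand-ins for the script 222, which is not reproduced in this section.

```python
import itertools
import shlex


def scheme_string(value):
    """Quote a Python string as a Scheme string literal for Script-Fu."""
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


def script_fu_call(template, text, out_file):
    # `make-text-button` is a hypothetical Script-Fu procedure standing in for script 222.
    return (f"(make-text-button {scheme_string(text)} {scheme_string(out_file)} "
            f"{template['width']} {template['height']} {template['color']})")


def batch_commands(templates, translations, languages):
    """One GIMP invocation per (template, language) pair."""
    commands = []
    for (name, template), lang in itertools.product(templates.items(), languages):
        text = translations[(name, lang)]
        out_file = f"{lang}/{template['file']}"
        commands.append(["gimp", "-i",
                         "-b", script_fu_call(template, text, out_file),
                         "-b", "(gimp-quit 0)"])
    return commands


def write_batch_file(commands):
    """Render the commands as lines of a POSIX shell batch file."""
    return "".join(shlex.join(cmd) + "\n" for cmd in commands)
```

Starting GIMP once per image is simple but slow; several `-b` commands could instead be passed to a single invocation before the final `(gimp-quit 0)`.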

- The parser 220 may be used to parse text information from a text element file 228 to create the text string file 226.

- A “text element file” is a file which contains text elements. An exemplary text element file written in XML is shown below.

- A “text element” is a property or attribute associated with a text string, including the text string itself.

- Text elements include the text string, a language of the text string, information regarding an associated immutable image file which includes the text string (e.g., display characteristics), and location information indicating a storage location for the associated immutable image file.
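Assuming a hypothetical XML schema in which each `<element>` carries a template name and output file name and contains one `<text lang="...">` child per language, the parsing step of parser 220 could look like this. It flattens the file into records and writes them as a tab-separated text string file; both the schema and the output format are assumptions.

```python
import xml.etree.ElementTree as ET

# Hypothetical text element file; the real schema is not shown in this section.
SAMPLE = """<textelements>
  <element id="cancel" template="Cancel" output="Cancel.gif">
    <text lang="en">Cancel</text>
    <text lang="de">Abbrechen</text>
  </element>
</textelements>"""


def parse_text_elements(xml_text):
    """Flatten the XML into one record per (element, language)."""
    root = ET.fromstring(xml_text)
    rows = []
    for element in root.iter("element"):
        for text in element.findall("text"):
            rows.append({
                "id": element.get("id"),
                "lang": text.get("lang"),
                "text": (text.text or "").strip(),
                "template": element.get("template"),
                "output": element.get("output"),
            })
    return rows


def to_text_string_file(rows):
    """Serialize records as tab-separated lines (a hypothetical file format)."""
    fields = ("id", "lang", "text", "template", "output")
    return "".join("\t".join(row[f] or "" for f in fields) + "\n" for row in rows)
```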

- The parser 220 may also parse the text element file 228 to obtain text strings for the translator module 218 to transfer to the server 260 for translation. After translation, the translated strings may be transferred back to the translator module 218 and merged back into the text element file 228, enabling multilingual applications to access the text elements in different languages.
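Merging translations back can be sketched against a hypothetical schema in which each `<element>` holds one `<text lang="...">` child per language: for each element with a source-language text, add or update a child for the target language. Strings without a translation are left untouched, and running the merge twice does not duplicate entries.

```python
import xml.etree.ElementTree as ET


def merge_translations(xml_text, translations, source_lang, target_lang):
    """Add or update <text lang=target_lang> children using a source->target mapping."""
    root = ET.fromstring(xml_text)
    for element in root.iter("element"):
        texts = element.findall("text")
        source = next((t for t in texts if t.get("lang") == source_lang), None)
        if source is None or not source.text:
            continue
        translated = translations.get(source.text.strip())
        if translated is None:
            continue  # leave untranslated elements for a later pass
        target = next((t for t in texts if t.get("lang") == target_lang), None)
        if target is None:
            target = ET.SubElement(element, "text", lang=target_lang)
        target.text = translated
    return ET.tostring(root, encoding="unicode")
```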

- The translation database 270 may be used to store strings in different languages, as well as other information such as template information associated with the template 230.

- An example of a very simple database table containing template information is:

| Template | File Name | Width | Height | Color |
|---|---|---|---|---|
| SysDef | SysDef.gif | 300 | 60 | 16 |
| Cancel | Cancel.gif | 200 | 60 | 25 |

- In this example, information is provided for two immutable image files, one for a system user button and one for a cancel button.

- A base file name is provided for storing an immutable image file for each template.

- The width, height, and color information of the buttons is also provided. Additional information such as shape, a base language text string (e.g., “System Defaults” or “Cancel” if English is the base language for translating the strings for these buttons), placement of the string within the button, and information regarding a previously generated immutable image file is stored in additional columns (not shown).

- The information regarding a previously generated immutable image file may be used to generate an empty (text-free) immutable image file, so that a translated string may be merged into the file.

- Alternatively, a call to the script 222 may be generated by requesting template information from the translation database 270; this information may then be included in the call to the script 222 in lieu of using the template file 230.
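Building the script call from the database instead of a template file might look as follows. The rows are the two from the example template table; the table and column names and the argument order are assumptions, and the Color values are stored as given because the document does not define their meaning.

```python
import sqlite3

# The two rows of the example template table.
TEMPLATE_ROWS = [
    ("SysDef", "SysDef.gif", 300, 60, 16),
    ("Cancel", "Cancel.gif", 200, 60, 25),
]


def load_templates(conn):
    conn.execute(
        "CREATE TABLE templates (template TEXT PRIMARY KEY, file_name TEXT,"
        " width INTEGER, height INTEGER, color INTEGER)"
    )
    conn.executemany("INSERT INTO templates VALUES (?, ?, ?, ?, ?)", TEMPLATE_ROWS)


def script_args(conn, template, text, language):
    """Build a hypothetical argument list for the image script from the template row."""
    row = conn.execute(
        "SELECT file_name, width, height, color FROM templates WHERE template = ?",
        (template,),
    ).fetchone()
    if row is None:
        raise KeyError(f"unknown template: {template}")
    file_name, width, height, color = row
    # One output directory per language keeps the base file name unchanged.
    return [text, f"{language}/{file_name}", str(width), str(height), str(color)]
```

The returned list would be appended to whatever command line invokes the script 222.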

- Specific examples of a script, a call to the script, a text element file, and a text string file are shown below.

- An exemplary image manipulation program used for the example is GIMP (GNU Image Manipulation Program).

- A commercially available program, such as a translation program available from Trados, may be used for translating the text strings. Such a program may be used by configuring code to obtain the strings for translation, provide the strings to the translation program, receive the translated strings, and add the translated strings to the text element file.

- The parser 220 may be configured to extract the text for translation from at least one image creation file that includes position information and text elements of a number of immutable image files containing text in the first language.

- The text elements may be extracted from an image creation file such as an HTML (HyperText Markup Language) file, an XML (Extensible Markup Language) file, or any other image creation file suitable for interpretation at a data processing device (e.g., client 102 or 104) for display.

- The parser 220 may automatically extract text elements from the image creation file and collect them in the text element file 228 for further processing.
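For an HTML image creation file, one plausible convention (an assumption here, not stated in the document) is that an image's text also appears in its `alt` attribute. Under that assumption, Python's standard `html.parser` can collect text elements together with their position in the file:

```python
from html.parser import HTMLParser


class ImageTextExtractor(HTMLParser):
    """Collect text elements from <img> tags, assuming `alt` holds the image's text."""

    def __init__(self):
        super().__init__()
        self.elements = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        text = attributes.get("alt")
        if text:  # images without text need no translation
            self.elements.append({
                "src": attributes.get("src"),
                "text": text,
                "position": self.getpos(),  # (line, column) of the tag in the file
            })
```

Usage: `p = ImageTextExtractor(); p.feed(html_source)`, then `p.elements` holds the records to be written to the text element file.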

- An image creation file may generally be used to create a screen display (e.g., at a client accessing a service application at a server or group of servers).

- The parser 220 may further merge the translated text elements in a second language into an image creation file for the second language.

- The text elements for the immutable image files in the second language may be provided in the image creation file for the second language, so that a client operated by a user who is fluent in the second language may be provided with a display screen including text elements in the second language and immutable image files containing text in the second language.
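Producing the image creation file for the second language could take several forms. This sketch swaps in a simpler technique than merging into an existing page: it fills a language-neutral page skeleton with `string.Template`, escaping each translated string with `html.escape`. The placeholder names and the `images/<lang>/` path are hypothetical.

```python
import html
from string import Template


def render_page(skeleton, texts, lang):
    """Fill a language-neutral page skeleton with escaped strings for one language.

    `texts` maps language -> {placeholder name -> translated string}.
    """
    values = {name: html.escape(value) for name, value in texts[lang].items()}
    values["lang"] = lang
    # substitute() raises KeyError if a placeholder has no translation,
    # so missing strings surface instead of silently staying untranslated.
    return Template(skeleton).substitute(values)
```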

- This example is particularly suitable for application in network environments such as the Internet.

- The original uses of the Internet were electronic mail (e-mail), file transfers (ftp or file transfer protocol), bulletin boards and newsgroups, and remote computer access (telnet).

- The World Wide Web (web), which enables simple and intuitive navigation of Internet sites through a graphical interface, expanded dramatically during the 1990s to become the most important component of the Internet.

- The web gives users access to a vast array of documents that are connected to each other by means of links, which are electronic connections that link related pieces of information in order to allow a user easy access to them.

- Hypertext allows the user to select a word from text and thereby access other documents that contain additional information pertaining to that word; hypermedia documents feature links to images, sounds, animations, and movies.

- The web operates within the Internet's basic client-server format.

- Servers include computer programs that may store and transmit documents (i.e., web pages) to other computers on the network when requested, while clients may include programs that request documents from a server as the user requests them.

- Browser software enables users to view the retrieved documents.

- A web page, with its corresponding text and hyperlinks, may be written in HTML or XML and is assigned an online address called a Uniform Resource Locator (URL).

- Information may be presented to a user through a graphical user interface called a web browser (e.g., browser 106 or 108).

- The web browser displays formatted text, pictures, sounds, videos, colors, and other data.

- Originally, HTML was used. HTML is a language in which a file is created that contains the necessary data as well as information relating to the format of the data.

- XML has emerged as a next generation of markup languages.

- XML is a language similar to HTML, except that it also includes information (called metadata) relating to the type of data as well as the formatting for the data and the data itself.

- This example may be used to extract text elements from HTML or XML pages, or from other types of pages such as image creation files, in order to facilitate provision of immutable image files in different languages for internationalizing service applications accessible over the Internet or any other network.

- The text element file 228 may include any kind of collection of text elements stored in a memory area of the data processing system 200 or at any other location.

- The text element file 228 may be provided at an external location, accessible from the data processing system 200 through a communication network.

- The text element file 228 may be a centralized resource accessible by a number of data processing systems, such as the data processing system 200, from arbitrary locations. Accordingly, decentralized generation of immutable image files in various languages may be possible, including consistent use of translated text elements. This allows consistent presentation of textual information to users, in contrast to environments in which different translation operations yield different translations of text elements for use in the same or different service applications.

- Collecting text elements in the text element file 228 may be facilitated by a check-in process, allowing registration of text elements and their translations in the text element file 228 in order to maintain information on the languages in which the individual immutable image files are available.
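A check-in process mainly needs to answer two questions: in which languages an element already exists, and whether a new translation conflicts with a registered one (the consistency concern raised above). The in-memory registry below is a hypothetical sketch of that bookkeeping; a real system would persist it in the text element file or a database.

```python
from collections import defaultdict


class TextElementRegistry:
    """Hypothetical check-in registry: which languages each text element exists in."""

    def __init__(self):
        self._texts = defaultdict(dict)  # element id -> {language: text}

    def check_in(self, element_id, language, text):
        existing = self._texts[element_id].get(language)
        if existing is not None and existing != text:
            # Reject a second, different translation to keep output consistent.
            raise ValueError(f"conflicting {language} text for {element_id!r}")
        self._texts[element_id][language] = text

    def languages(self, element_id):
        return sorted(self._texts.get(element_id, {}))

    def missing(self, element_id, wanted):
        """Languages from `wanted` for which no image text is registered yet."""
        have = self._texts.get(element_id, {})
        return [lang for lang in wanted if lang not in have]
```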

- The text element file 228 may include an XML file, and an XML editor may be used for generating the translated text elements. Further, the text element file 228 may include information on a desired output file name for each immutable image file and information regarding a template, such as template 230, which may be needed for creation of an immutable image file.

- The text element file 228 may also serve as a basis of information enabling proper handling of the created immutable image files and enabling selection of a suitable template for the collected text elements.

- Different types of text elements may be associated with different templates, enabling creation of immutable image files in certain categories (e.g., characterized by size, color, or any other graphic information).

- For example, immutable image files related to data handling may be generated using a first template, whereas immutable image files enabling a setting of references (e.g., for display) may be generated using a second template.

- The translation module 278 for obtaining translated text elements in at least one second language may include a program or a sequence of instructions (as described below) to be executed by the CPU 262, corresponding hardware, or a combination of hardware and software realizing the functionality of obtaining translated text elements in the second language.

- The translation of the text elements may be accomplished by using pretranslation databases.

- The translator module 218 may be configured to extract relevant information from the text element file 228, or to import this information into a database such as the translation database 270 for improved handling of the text elements. After identifying individual text elements, the translator module 218 may invoke a translation service providing a translation of each text element into the second language.

- The translation service may include an internal application, such as a thesaurus provided in a memory such as memory 206, within or accessible from the server 201. Further, the translation service may be an external translation service provided by an external application program (e.g., offered by a third party). For example, the translator module 218 may be configured to invoke a web-based translation tool accessed through a computer network such as a local area network, the Internet, or a combination thereof.
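Trying an internal translation source first and falling back to an external one can be expressed as a chain of callables. In the sketch below both services are hypothetical: the internal one is a dictionary standing in for the thesaurus, and the external one is any callable wrapping a third-party or web-based tool. No real translation API is assumed.

```python
from typing import Callable, Dict, Iterable, Optional, Tuple

# A service returns the translation, or None if it has no answer.
TranslationService = Callable[[str, str, str], Optional[str]]


def make_thesaurus_service(entries: Dict[Tuple[str, str, str], str]) -> TranslationService:
    """Internal service backed by an in-memory table keyed by (text, source, target)."""
    def service(text: str, source_lang: str, target_lang: str) -> Optional[str]:
        return entries.get((text, source_lang, target_lang))
    return service


def translate_with_fallback(text: str, source_lang: str, target_lang: str,
                            services: Iterable[TranslationService]) -> Optional[str]:
    """Ask each service in order; the first non-None answer wins."""
    for service in services:
        result = service(text, source_lang, target_lang)
        if result is not None:
            return result
    return None
```

Ordering the internal source first keeps previously approved translations stable, which supports the consistency goal described for the text element file.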

- The translated image text elements may be collected in the translation database 270 and/or merged into the text element file 228.

- A program may be provided having instructions configured to cause a data processing device to carry out the method of at least one of the above operations.

- A computer-readable medium may be provided in which a program is embodied, where the program is to make a computer execute the steps of the method discussed above.

- A computer-readable medium may be provided having a program embodied thereon, where the program is to make a computer or a system of data processing devices execute functions or operations of the features and elements of the examples described previously.

- A computer-readable medium may be a magnetic, optical, or other tangible medium on which a program is recorded, but may also be a signal (e.g., analog or digital, electronic, magnetic, or optical) in which the program is embodied for transmission.

- A computer program product may be provided comprising the computer-readable medium.

- the text string file 226 may include information of a desired output file name of the respective immutable image files and information regarding a template, such as the template 230 , used for creation of an immutable image file.

- a template 230 may include any kind of image file information.

- a template may include information related to at least one of image size; image shape; color; and position of a text element in an image.

- a number of templates for various images may be provided and stored in the memory 206 or at an external location.

- the template information may be included in the call to the script 222 .

- the text string file 226 may also serve as a basis of information enabling proper handling of the created images and enabling selection of a suitable template for the text elements collected.

- different types of text elements may be associated with different templates, allowing creation of images in various categories such as size, color or any other type of graphic information.

- images related to data handling may be generated using a first template, whereas images for setting preferences, such as display preferences, may be generated using a second template.

- the image size may specify an area of the image such as an area in a display screen to be displayed at a client unit. Further, the image size may specify a minimum size of the image, such as a size suitable to fit the text element.

- the image shape information may specify any graphic parameters for obtaining certain graphic effects such as shading or 3-D effects. Further, the shape may also specify geometric shapes such as rectangles, circles and polygons.

- the image color may include information related to shading of the image to obtain further graphic effects.

- the image information may further include the position of a text element within the image, enabling proper placement of the text element, and/or the position of the image on a display screen as specified in an image creation file (e.g., an HTML file), enabling proper placement of the image, such as in a menu of several images displayed at a client device.
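One way to hold this image file information is a small record per template. The sketch below is an assumption about representation only. The field names and the `fitted_width` helper are hypothetical, and the per-character width is a crude placeholder for real font metrics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageTemplate:
    """Hypothetical template record holding the image file information."""

    width: int       # nominal image width in pixels
    height: int      # image height in pixels
    shape: str       # geometric shape, e.g. "rectangle" or "circle"
    color: str       # fill color, e.g. "#336699"
    text_x: int      # horizontal position of the text element
    text_y: int      # vertical position of the text element

    def fitted_width(self, text: str, char_width: int = 7, padding: int = 8) -> int:
        """Return a width that is at least large enough for `text`.

        A fixed per-character width stands in for real font metrics, so the
        result only approximates the minimum size that fits the text element.
        """
        needed = self.text_x + len(text) * char_width + padding
        return max(self.width, needed)
```

Translations are often longer than their source ("user groups" versus "Systemeinstellungen" on the same kind of button), which is why a template that fixes only a nominal width may need to grow to fit the text.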

- the system of FIG. 2 facilitates a generation of immutable images for screen displays similar to those discussed with regard to FIG. 1, wherein the images have text elements with different languages, in order to adapt a service application to different languages of different users.

- a first user who is fluent in a first language may be provided with display screens displaying screen objects with text information in the first language.

- a second user who prefers a second language may be provided with a display screen displaying screen objects with text elements in the second language.

- the data processing system 200 may generally include any computing device or group of computing devices connected with one another.

- the data processing system 200 may be a general purpose computer such as a desktop computer, a laptop computer, a workstation or combinations thereof.

- the functionality of the data processing system 200 may be realized by distributed data processing devices connected in a local area network such as a company-wide computer network or may be connected by a wide area network such as the internet, or combinations thereof.

- the image manipulation program 224 may be a graphics application provided as a standalone program, e.g., offered by a third party. Further, the image manipulation program 224 may be a commercially available graphics application enabling generation of immutable image files based on given text elements and given template information. For example, the image manipulation program 224 may include the GNU (Gnu's Not UNIX) image manipulation program GIMP, which is described at www.gimp.org.

- the system 200 is configured to instruct the image manipulation program 224 to generate immutable image files based on given parameters and may be adapted to generate a script to instruct the image manipulation program 224 to generate the immutable image files based on the given parameters.

- a script such as script 222 may conveniently instruct the image manipulation program 224 based on the parameters.

- the script may be a sequence of instructions provided to the image manipulation program 224 , instructing the image manipulation program 224 to generate an immutable image file having certain properties and including a specific text element.

- the script 222 may include a text element for insertion into the immutable image file.

- the script may be written and intermediately stored in a file (e.g., as a batch file stored in secondary storage 208 ), to be provided to the image manipulation program 224 , and may include the parameters discussed above (i.e., parameters needed to generate the immutable image file).

- the parameters may include a template and the text element of the immutable image.

- the parameters may include immutable image file information as discussed above, including image size, shape, color and/or position of a text element within the image.

- the parameters may include a number of text elements of the image and at least one template, enabling a concurrent generation of various immutable image files with different text elements and/or templates.

- Each text element may be associated with a particular template (e.g., specified in the input file), or the text elements may be categorized in groups, each group associated with a particular template.

- the parser 220 may be configured to generate the text string file 226 including at least one text element (e.g., from the text element file 228 ) and the image manipulation program 224 may be instructed, using the script 222 , to access the text string file 226 in order to retrieve at least one text element of the text string file 226 .

- the text string file 226 may be intermediately stored in the memory 206, or at any other location, and may be based on at least a portion of the text element file 228.

- the text string file 226 may further include at least one of a language identifier; and a desired name of an immutable image file to be created.

- the language ID makes it easier to classify the immutable image files created from the text elements, and a desired name of an immutable image file to be created makes the generated graphic elements, including the image files, easy to access, for example from updated HTML code that controls the screen display at a client unit.
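The description leaves the layout of the text string file open. Purely as an illustration, the sketch below assumes a UTF-8, tab-separated file with one line per image: language identifier, desired output file name, and text element. The entry type and both helper functions are hypothetical.

```python
import csv
import io
from typing import List, NamedTuple


class TextStringEntry(NamedTuple):
    lang: str        # language identifier, e.g. "de" or "en_US"
    file_name: str   # desired name of the immutable image file
    text: str        # text element to render into the image


def write_text_string_file(entries: List[TextStringEntry]) -> str:
    """Serialize entries as tab-separated text (assumed format)."""
    buf = io.StringIO()
    writer = csv.writer(buf, delimiter="\t", lineterminator="\n")
    for entry in entries:
        writer.writerow(entry)
    return buf.getvalue()


def read_text_string_file(data: str) -> List[TextStringEntry]:
    """Parse the tab-separated format back into entries."""
    reader = csv.reader(io.StringIO(data), delimiter="\t")
    return [TextStringEntry(*row) for row in reader if row]
```

The returned string can be saved with `open(path, "w", encoding="utf-8")` so that non-ASCII characters such as German umlauts survive.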

- the image manipulation program 224 may access the text string file 226 , retrieve the text elements and generate the immutable image file based on the immutable image file information.

- the immutable image file information may be directly included in the text string file 226 .

- the script 222 and image manipulation program 224 may be configured to generate an empty immutable image file based on the template 230 and may be configured to merge the translated text element into the empty immutable image file.

- a collection of immutable image files with no text may be created, and text elements may be included on demand.

- This enables generation of a generic image creation file (e.g., an HTML file) for generating a screen display at a client unit.

- the text elements in the respective languages can then be included in the generic image creation file in order to generate image creation files in different languages.
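A generic image creation file of this kind can be imitated with placeholders. The sketch below assumes Python's `string.Template` placeholder syntax and an HTML layout invented for illustration. Both the image path and the alternative text are filled in per language. The names `GENERIC_HTML` and `localize` are hypothetical.

```python
from html import escape
from string import Template
from typing import Dict

# Hypothetical generic image creation file: the language code and the
# button texts are placeholders rather than fixed values.
GENERIC_HTML = Template(
    '<a href="settings"><img src="images/${lang}_settings.png" alt="${settings}"></a>\n'
    '<a href="groups"><img src="images/${lang}_groups.png" alt="${groups}"></a>\n'
)


def localize(lang: str, texts: Dict[str, str]) -> str:
    """Produce the image creation file for one language.

    `texts` maps placeholder names ("settings", "groups") to the text
    elements in that language; values are HTML-escaped before insertion.
    """
    escaped = {name: escape(value) for name, value in texts.items()}
    return GENERIC_HTML.substitute(lang=lang, **escaped)
```

Calling `localize("de", {...})` and `localize("en_US", {...})` on the same generic file yields one image creation file per language.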

- the image manipulation program 224 may generate immutable image files for storage at the data processing system 200 or at any other location.

- the immutable image files may also be included directly in image creation files for generating screen displays at client units, or image creation files that reference the stored immutable image files may be created.

- Two exemplary immutable image files which may be created using system 200 are an immutable image file in a first language, English, displaying the English expression “system defaults”, and a second image button in the second language, German, which includes the corresponding German expression “Systemeinstellungen.”

- the buttons 122 and 132 of FIG. 1b illustrate screen displays of the immutable image files including these text elements.

- FIG. 3 depicts a high-level flowchart illustrating steps of a method for automatically localizing an immutable image file containing text in a first language, the method being suitable for use with methods, systems and articles of manufacture consistent with the present invention, such as the system of FIG. 2.

- a translation system such as the system shown on server 260 translates the text from the first language into a second language that is different from the first language (Step 300 ).

- script 222 and image manipulation program 224 automatically generate a translated immutable image file containing the text in the second language (Step 302 ).

- FIG. 4 depicts an exemplary high level logical flow using elements of a system for generating immutable image files containing specific exemplary text in different languages according to another example.

- FIG. 4 particularly illustrates extraction of text elements and merging of translated image text elements into image creation files such as HTML or XML files.

- screen displays may be generated for client devices accessing services at a server. As the client devices may be located in different geographic areas supporting different languages, the service provided at the server may be enabled to generate image creation files or screen displays for the client devices in different languages.

- FIG. 4 illustrates two screen displays based on image creation files, in different languages.

- a first screen display 410 includes English language buttons 411 and 412 .

- the displayed buttons in the present example are used as menu items allowing control of a corresponding service application at a server.

- the button 411 in the present example is assumed to relate to system settings and therefore shows the English expression “system settings.”

- the second image button 412 relates to user group functionality, and therefore displays the expression “user groups.”

- An image creation file corresponding to the screen display 410 may be used for users preferring the English language and may thus be provided from the server to corresponding client devices which have selected English as the preferred language.

- the second screen display 450 shown in FIG. 4 shows German language buttons and therefore is suitable for users in a region that supports the German language, or users who have selected German as their preferred language.

- the screen display 450 includes buttons 451 and 452 .

- the button 451 includes the German expression “Systemeinstellungen” (system settings), indicating that the corresponding button also relates to system functions.

- the button 452, corresponding to the button 412, contains the German expression “Benutzergruppen” (user groups), indicating that this button also relates to user group functionality.

- the screen displays may include a larger number of immutable images and/or sub-menus or sub-screens, so that there may well be a very large number of different immutable image files to be considered.

- the exemplary text elements may be extracted from the screen display 410 corresponding to the English language and may be translated into text elements for the screen display 450 for the German language. This extraction and creation process may be carried out offline. Thus, immutable image files for screen displays in different languages may be created and stored beforehand and made accessible to a service application such as a text processing application.

- the extraction and creation process for providing immutable image files containing text in different languages may be provided on demand (e.g., if a user with a particular preferred language logs into the server).

- the screen display (i.e., the image creation file) in the corresponding language, including text elements and images in the preferred language, may be created dynamically (i.e., on demand).
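The difference between the offline and on-demand variants can be sketched as a cache placed in front of the image generator. The `render` callable below is a hypothetical stand-in for the graphics program (it returns image bytes), and the class name is illustrative.

```python
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical stand-in for the graphics program: (element, lang) -> image bytes.
Renderer = Callable[[str, str], bytes]


class ImageStore:
    """Create immutable images lazily and reuse them for later requests."""

    def __init__(self, render: Renderer) -> None:
        self._render = render
        self._images: Dict[Tuple[str, str], bytes] = {}

    def get(self, element: str, lang: str) -> bytes:
        """On-demand path: render only the first time a pair is requested."""
        key = (element, lang)
        if key not in self._images:
            self._images[key] = self._render(element, lang)
        return self._images[key]

    def pregenerate(self, elements: Iterable[str], langs: Iterable[str]) -> None:
        """Offline path: create every (element, language) image beforehand."""
        elements = list(elements)
        for lang in langs:
            for element in elements:
                self.get(element, lang)
```

With this design a server can pregenerate the common languages and still fall back to on-demand creation when a user with a less common language preference logs in.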

- an arrow 460 illustrates a corresponding extraction process enabling extraction of the text elements from the image buttons 411 and 412 and provision to the text element file 420 .

- the extraction process may be carried out as described previously.

- the text element file 420 therefore will contain, in the present simplified example, the expressions “system settings” and “user groups.” Then, in steps 461 and 462, translated text elements are obtained using a translation service 430.

- the text element file 420 may include corresponding language identifiers (i.e., showing that the original text elements are in the English language and that needed text elements should be in the German language).

- the text element file 420 will further include the German expressions “Systemeinstellungen” and “Benutzergruppen.” Thereafter, as illustrated by arrow 463, a graphic program 440 is instructed to generate immutable image files in the German language, corresponding to the buttons 411 and 412 in the English language. The creation of the immutable image files has been described previously.

- immutable image files corresponding to the buttons 451 and 452 are generated.

- the immutable image files may be checked into a database of immutable image files or may be stored intermediately in any other way. Thereafter, as outlined by arrow 464, the screen display 450 is provided for display at a client device, or a corresponding image creation file is generated.

- an English language user accessing a particular service controlled by the screen displays 410 or 450 at the client units may be provided with the English language screen display 410.

- a German language user may be provided with the German language screen display 450 .

- FIG. 5 depicts an exemplary logical flow of elements of a system for obtaining and using immutable image files in a number of different languages according to another example.

- FIG. 5 particularly shows how the immutable image files in different languages may be used to supply users with display screens in different languages.

- FIG. 5 shows a data processing device 500 for obtaining image buttons in a number of different languages.

- the data processing device 500 may include a single processing device or a number of processing devices in communication with one another.

- the data processing device 500 includes a text element file 501 (e.g., as described previously).

- the text element file 501 may be stored in an internal memory or may be provided at an external memory location.

- the data processing device 500 includes an input file 502 .

- the input file 502 may include at least one text element, and may further include a language ID and/or name of an immutable image file to create.

- the input file may also be stored at an internal location or at any other external memory location.

- the data processing device 500 also includes a template 503 including immutable image file information such as image size and/or image shape and/or color and/or a position of a text element within the image.

- the data processing device 500 may store a number of templates at an internal memory location or an external memory accessible from the data processing device 500 .

- the template 503 may be selected based on information contained in the text element file 501 or on information on image parameters obtained from another source. Further, the template 503 may be dynamically generated.

- the data processing device 500 includes a script 504 , as described previously.

- the script 504 may be generated based on the input file 502 , and may include information of the input file 502 or a reference or storage location enabling access to the input file. Further, the script 504 may include image information from the template 503 .

- the script 504 may be intermediately stored at the data processing device 500 or may be dynamically generated and transferred to the graphics application program 505 .

- the graphics application program 505 may be a graphics application (e.g., GIMP) as described previously, provided at the data processing device, as shown in FIG. 5, or may be provided at an external location, arranged to be accessed from the data processing device 500 through a communication link.

- the graphics application program 505 is instructed to generate at least one immutable image file, as described previously.

- the immutable image files with text elements in different languages, as illustrated by reference numeral 506 may be stored in the data processing device 500 , or may be stored at an external location.

- the data processing device 500 invokes a translation service 510 in order to obtain translated text elements.

- the translation may be performed by a translation system as described below in more detail.

- a server 520 which provides services to a number of users 541 and 542 . The services enable users to access and/or manipulate data at the server 520 from remote locations through client devices.

- the server 520 may include a large computing device providing services such as office applications, communications applications or any other applications.

- Exemplary clients 541 and 542 are arranged to access the server 520 through the Internet, as illustrated at 530 , in order to control execution of a service application, as described previously.

- the application at the server 520 generates image creation files or screen displays for the clients 541 and 542 in different languages.

- FIG. 5 illustrates two exemplary screen displays 521 and 522 in a first and a second language.

- the server 520 provides image creation files to the clients 541 and 542 in the preferred language.

- the data processing device 500 may be configured to extract text elements (e.g., from an image creation file corresponding to screen display 521 at the server 520 ), including text elements in a first language TE 1 , TE 2 , TE 3 , and TE 4 .

- the text elements are stored in the text element file 501 .

- the data processing device 500 obtains translated text elements in the second language using the translation service 510 .

- the translated text elements are then merged into the text element file 501 .

- the input file 502 is generated and, based thereon and on the template 503 the script 504 is generated.

- the script 504 then instructs the graphics application program 505 to generate the immutable image files with text elements TE 1 , TE 2 , TE 3 , and TE 4 in the second language.

- the server 520 uses the immutable image files in the various languages to generate image creation files (e.g., in HTML or XML), such as image creation files corresponding to the screen displays 521 and 522.

- FIG. 6 depicts a flowchart of steps of an exemplary method for generating screen objects (e.g., image buttons) in a number of languages. The steps of FIG. 6 may be carried out using the system shown in FIG. 2; however, FIG. 6 is not limited thereto.

- a parser generates an input file including at least one image text element and/or a language ID and/or a name of an immutable image file to be created (Step 601).

- the input file may resemble the text string file 226 and may correspond to at least part of the text element file 228 .

- a parser obtains image information (i.e., information on the image such as color, shape, dimensions, and position of a text string within the image) from a template (Step 602).

- the image information may relate to one or a number of templates (as required for the text elements). For example, image information for different groups of text elements and/or different languages may be obtained.

- Obtaining the image information may also include dynamically generating image information based on information from the input file (e.g., a size of a text element in a particular language).

- a script is initiated to instruct a graphic application program (e.g., the image manipulation program 224, which may be implemented using the GNU (Gnu's Not UNIX) image manipulation program GIMP) to generate at least one immutable image file, the script including information on the input file (e.g., a file name or address) and on the at least one immutable image file (Step 603).

- the script may be used to instruct a graphic application program on a remote server to create an immutable image file based on given parameters.

- the given parameters may be obtained from the input file and may be provided with the image information.

- the graphic application program may access or retrieve the input file from a memory including at least one of text elements, language IDs and immutable image file names. Further, the input file may also include the image information.

- the graphic application program generates at least one immutable image file based on the image information and the translated text element (Step 604 ). For example, a number of immutable image files for a particular text element in different languages may be created simultaneously (e.g., if the input file includes one text element in a number of languages). Further, a number of immutable image files may be created simultaneously for a number of text elements in one language. Moreover, a combination of both cases is possible, wherein a number of immutable image files for a number of text elements in a number of languages may be created.
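Since Step 604 may cover several text elements, several languages, or both, the set of images to create is the cross product of elements and languages. This sketch assumes translations are held in a nested dict and that files are named `<lang>_<base>.png`; both the data layout and `plan_jobs` are hypothetical.

```python
from itertools import product
from typing import Dict, List, Tuple


def plan_jobs(
    translations: Dict[str, Dict[str, str]],
    langs: List[str],
) -> List[Tuple[str, str, str]]:
    """Return (file name, language, text) for every element/language pair.

    `translations` maps an element's base name to {language: text}.
    Pairs without a translation are skipped instead of rendered empty.
    """
    jobs = []
    for base, lang in product(sorted(translations), langs):
        text = translations[base].get(lang)
        if text is None:
            continue  # no translation available yet for this language
        jobs.append((f"{lang}_{base}.png", lang, text))
    return jobs
```

A single element with many languages, many elements in one language, and the mixed case all reduce to the same job list, which can then be handed to the graphics program in one batch.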

- a number of templates may be employed to generate immutable image files for different groups of text elements.

- the text elements may be classified according to language ID or according to content, in order to be able to make characteristics or appearance of an immutable image file language dependent and/or content dependent.

- Different templates may be used for each classified group. For example, text elements for data manipulation may be classified into a first group for images having a first appearance, and text elements for setting preferences may be collected in a second group for images having a second appearance.
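Grouping text elements by category before rendering could look like the sketch below. The category labels follow the example above (data manipulation versus setting preferences). The template names and the fallback to a default template are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def group_by_template(
    elements: List[Tuple[str, str]],
    template_for_category: Dict[str, str],
    default_template: str,
) -> Dict[str, List[str]]:
    """Group text elements so that each group is rendered with one template.

    `elements` holds (text, category) pairs. Categories without an explicit
    template fall back to `default_template` (an assumed policy).
    """
    groups: Dict[str, List[str]] = defaultdict(list)
    for text, category in elements:
        template = template_for_category.get(category, default_template)
        groups[template].append(text)
    return dict(groups)
```

The same function works for language-dependent appearance if the category is a language ID instead of a content class.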

- the graphic application program then stores at least one immutable image file using the at least one immutable image file name (Step 605 ).

- the file names may enable a convenient identification of the immutable image files in later processing steps (e.g., during generation of image creation files, for example for clients fluent in different languages).

- the graphic application program may generate an empty immutable image file (i.e., an immutable image file free of a text element) based on the image button information (Step 606 ).

- immutable image files for receiving text elements may be created.

- the translated text elements may be merged into the respective empty immutable image files (Step 607 ).

- a library of immutable image files may be created and the text elements may be merged into the retrieved empty immutable image files.

- the graphic application program described previously includes the GNU (Gnu's Not UNIX) image manipulation program GIMP.

- creation of immutable image files 232 and 234 is accomplished by a GIMP call, a GIMP input file (e.g., text string file 226 ) and a GIMP script (e.g., script 222 ).

- the GIMP call is initiated (e.g., on a command line of an operating system such as Windows or from a batch file by the operating system) and in turn instructs the GIMP to input text string information from the input file and template information from the call itself.
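A GIMP call of this kind can be assembled programmatically. `-i` (run without a user interface) and `-b` (execute a batch command) are standard GIMP 2.x command-line options, and `(gimp-quit 0)` ends the batch session. The Script-Fu procedure `make-localized-buttons`, its argument order, and the input file name are hypothetical stand-ins for script 222 and the text string file 226. As in the description, the text strings come from the input file while template values travel in the call itself.

```python
import shlex
from typing import List


def scheme_string(value: str) -> str:
    """Quote a Python string as a Script-Fu (Scheme) string literal."""
    escaped = value.replace("\\", "\\\\").replace('"', '\\"')
    return f'"{escaped}"'


def gimp_call(input_file: str, width: int, height: int, color: str) -> List[str]:
    """Build a GIMP batch command line (argv list).

    `make-localized-buttons` is a hypothetical Script-Fu procedure; the
    template values (size, color) are passed directly in the call.
    """
    batch = "(make-localized-buttons {} {} {} {})".format(
        scheme_string(input_file), width, height, scheme_string(color)
    )
    return ["gimp", "-i", "-b", batch, "-b", "(gimp-quit 0)"]


if __name__ == "__main__":
    # Print the equivalent shell command; on a machine with GIMP and the
    # script installed, the list could be passed to subprocess.run().
    print(shlex.join(gimp_call("input.utf8.txt", 120, 24, "#336699")))
```

Building the call as an argument list rather than one shell string avoids quoting problems when text or file names contain spaces or quotes.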

- FIG. 7 depicts a flowchart of steps of another exemplary method for generating immutable image files in different languages. The steps of FIG. 7 may be carried out using the system shown in FIG. 2; however, FIG. 7 is not limited thereto.

- a parser transfers text elements into an XML text file or a database (Step 701 ). Transferring the text elements may be effected by extracting text elements from image creation files or from screen displays including immutable image files (e.g., HTML or XML files for display at a client device). Transferring the text elements to an XML file or a database facilitates efficient handling of the text elements.

- the database may be provided internal to a data processing device such as the data processing system 200 shown in FIG. 2, or at any other location.

- the XML file including the text elements may be visualized for an operator using an XML editor.

- a web-based tool or an XML editor obtains translated image text elements (Step 702 ).

- the text elements may be automatically extracted from the XML text file or database and transferred to the translation service, which returns translated text elements as described previously.

- Commands for the translation service may include a desired language.

- the translation service may be a commercially available translation service or a translation service using an application at the data processing system 200 shown in FIG. 2 and as described below.

- the web-based tool or the XML editor merges the translated text elements received from the translation service back into the XML file or the database (Step 703). Accordingly, a collection of text elements, each available in different languages, may be provided in the XML file or database. Thereafter, the graphic application program (e.g., GIMP) as discussed above generates immutable image files based on the translated image text elements as described previously (Step 704).
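Merging translated text elements back into an XML text file (Step 703) might look like the following. The XML layout (an `<element>` per text element with one `<text lang="...">` child per language) is an assumption for illustration, and `merge_translations` is hypothetical. Only the standard `xml.etree.ElementTree` module is used.

```python
import xml.etree.ElementTree as ET
from typing import Dict

# Hypothetical layout of the XML text file: one <element> per text element,
# with one <text> child per language.
SOURCE = """<elements>
  <element id="settings"><text lang="en_US">system settings</text></element>
  <element id="groups"><text lang="en_US">user groups</text></element>
</elements>"""


def merge_translations(xml_text: str, lang: str, translations: Dict[str, str]) -> str:
    """Add or replace the <text lang=...> child of each translated element."""
    root = ET.fromstring(xml_text)
    for element in root.iter("element"):
        new_text = translations.get(element.get("id"))
        if new_text is None:
            continue  # no translation for this element
        node = element.find(f"text[@lang='{lang}']")
        if node is None:
            node = ET.SubElement(element, "text", lang=lang)
        node.text = new_text
    return ET.tostring(root, encoding="unicode")
```

Because an existing `<text>` for the same language is overwritten rather than duplicated, running the merge again with corrected translations does not add entries.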

- FIG. 8 depicts a flowchart of exemplary, optional steps of a method for generating immutable image files in a number of languages. The steps of FIG. 8 may be carried out using the system shown in FIG. 2; however, FIG. 8 is not limited thereto.

- text elements in a first language are input (Step 801 ).

- a user may generate the text elements manually.

- a parser may obtain an image creation file (e.g., an HTML or XML file) (Step 802 ).

- the parser extracts text elements in a first language from the image creation file (Step 803 ).
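For an HTML image creation file, Step 803 could be approximated with Python's standard `html.parser`. The sketch assumes that each image button carries its text in the `alt` attribute of its `<img>` tag. The patent does not say where the parser finds the text, so treat this as one possible source rather than the described mechanism. Class and function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Tuple


class ImageTextExtractor(HTMLParser):
    """Collect (src, alt) pairs from <img> tags in an image creation file."""

    def __init__(self) -> None:
        super().__init__()
        self.elements: List[Tuple[str, str]] = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        if alt:  # images without text (e.g. logos) are ignored
            self.elements.append((attributes.get("src", ""), alt))


def extract_text_elements(html: str) -> List[Tuple[str, str]]:
    """Return (image source, text element) pairs found in `html`."""
    parser = ImageTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.elements
```

Keeping the image source alongside the text lets a later step map each translated text element back to the button it came from.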

- the parser generates a text element file (e.g., text element file 228 as discussed above) with the text elements (Step 804 ).

- the parser transfers the text elements from the text element file into a database (Step 805 ).

- a translator module invokes a translation service (e.g., the system associated with server 260 ) to translate the text elements into a second language (Step 806 ).

- the translator module 218 invokes a translation service for translating the text elements in the first language into text elements in the second language (Step 806 a ).

- the translation module 278 then inserts the text elements in the second language into the database (Step 806 b ).

- An example of a technique for translation is given in more detail below.

- the translator module 218 obtains the translated text elements and merges the text elements in the second language into the text element file 228 as discussed above (Step 807 ).

- the text element file 228 now includes text elements in the first language and corresponding text elements in the second language.

- a parser then obtains display characteristics of the image (e.g., color, shape, dimensions, and position of text within image) from at least one template 230 as discussed above (Step 808 ).

- an operating system (e.g., operating system 250) obtains at least one image creation script (e.g., script 222).

- a user may create at least one script 222 for generating immutable image files.

- the script may be generated automatically.

- a script may be generated for each individual text element in each particular language.

- a script for a number of text elements in one language may be generated or a script for one text element in a number of languages may be generated for use by the operating system in conjunction with an image manipulation program (e.g., image manipulation program 224 ).

- the script 222 instructs the image manipulation program 224 to generate at least one immutable image file 232 with a text element in the second language as discussed above (Step 810 ).

- the image manipulation program then stores the immutable image file in a location specified by desired location information stored in the text string file 226 as discussed above (Step 811 ).

- the immutable image file 232 may then be used to serve users with different language preferences.

- the image manipulation program 224 described previously includes the GNU (Gnu's Not UNIX) image manipulation program GIMP.

- Code examples of a GIMP call, a GIMP input file (file name: .utf8.txt) and a GIMP script are given below.

- the exemplary GIMP input file shown below (which corresponds to the text string file 226 ) includes exemplary desired location information for storing generated immutable image files.

- the exemplary desired location information is designed so that each file generated for a particular template is assigned a file name with the same general base name, with a distinguishing prefix which includes a language code (e.g., “de” for German, “en_US” for U.S. English).

- the files are stored in a common directory.

- This naming and storage convention enables convenient update of image creation programs such as HTML or XML code by simply modifying the prefix of a referenced image file to agree with the language preference of a particular user to enable a browser of the user to access the immutable image files which have been generated for the user's preferred language.
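The prefix convention can be illustrated with a small rewrite function. The regular expression assumes language codes shaped like `de` or `en_US`, PNG files, and image references in `src` attributes. Those details, and the function names, are assumptions rather than part of the description.

```python
import re

# Matches src attributes such as src="images/en_US_settings.png": an optional
# directory, a language-code prefix ("de", "en_US"), "_", and the base name.
IMAGE_REF = re.compile(r'(src="(?:[^"]*/)?)([a-z]{2}(?:_[A-Z]{2})?)_([^"/]+\.png")')


def image_name(lang: str, base: str) -> str:
    """File name for one language in the common image directory."""
    return f"{lang}_{base}.png"


def switch_language(html: str, lang: str) -> str:
    """Point every prefixed image reference at the given language."""
    return IMAGE_REF.sub(lambda m: f"{m.group(1)}{lang}_{m.group(3)}", html)
```

Because all language variants share one directory and one base name, switching a page to another language only touches the prefix, and the user's browser then loads the images generated for that language.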

- the XML file is an example of a file initially created by a developer when developing a system using immutable image files for display, in order to describe elements of the immutable image file such as text strings which may need to be translated for multilingual users.

- the XML file as shown has been processed by extracting the original German text strings, translating the strings into other languages, and then merging the translated strings back into the text element file as discussed above.

- the file may be used to generate a GIMP input file by extracting strings in different languages for insertion into the GIMP input file as discussed above.

- the discussion which follows relates to database application methods and programs for localizing software, translating texts contained therein and to support translators in localizing software and translating texts. It also relates to a translation system for localizing software, translating texts and supporting translators.

- Conventional translation programs attempt to translate texts on a “sentence by sentence” basis. Such translation programs encompass the analysis of the grammatical structure of a sentence to be translated and the transfer of it into a grammatical structure of a target language sentence, by searching for grammar patterns in the sentence to be translated.

- the text to be translated may also be called the source language text. Translation is usually accomplished by analyzing the syntax of the sentence by searching for main and subordinate clauses. For this purpose the individual words of the sentence need to be analyzed for their attributes (e.g., the part of speech, declension, plurality, and case). Further, conventional translation programs attempt to transform the grammatical form from the source language grammar to the target language grammar, to translate the individual words and insert them into the transformed grammatical form.

- Ideally, the translated sentence exactly represents or matches the sentence to be translated.

- One difficulty is that many words in the text to be translated are ambiguous, each corresponding to several different words in the target language. Therefore, as long as translation programs cannot use thesauruses specifically adapted to detecting the correct semantic expression and meaning of a sentence from its context, machine translations remain imperfect.

- Another problem is connected with software localization (i.e., the adaptation of software to the particular local, geographic, or linguistic environment in which it is used). Localization must be done with internationally available software tools to ensure proper functionality for users from different cultural and linguistic backgrounds. The problem is compounded by the fact that different translators work simultaneously on different aspects of the software. For example, one team translates the text entries in the source code, another team translates the manuals, and a third team translates the “Help” files. To achieve a correct localized version of the software, the three teams should use the same translations for common words so that the manuals, the “Help” files, and the text elements in the software itself consistently match each other. Conventionally, this requires the teams to agree on the keywords and their translations used in the software, which is time consuming and requires good communication among the teams.

- An exemplary method described below localizes software by adapting the language of the texts in its source code. The method first extracts text elements from the source code.

- The extracted text elements are then searched for untranslated text elements.

- Translation elements are retrieved for each untranslated text element (e.g., by searching a database with pre-stored matching source text elements and respective translations).

- The next step associates each untranslated source-language element found by the search with the retrieved translation elements, if any such translation elements are found.
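
A minimal Python sketch of these first steps (extract, search for untranslated elements, retrieve, associate) follows. It assumes, hypothetically, that translatable strings in the source code are wrapped in `_("...")`, that the set of already translated source texts is known, and that the translation database is a plain mapping from source text to candidate translations. None of these details come from the patent.

```python
import re
from dataclasses import dataclass, field

# Hypothetical marker for translatable text elements in the source code.
TEXT_ELEMENT = re.compile(r'_\("((?:[^"\\]|\\.)*)"\)')


@dataclass
class PretranslationRow:
    source: str                                          # untranslated text element
    proposals: list[str] = field(default_factory=list)   # associated translations


def extract_text_elements(code: str) -> list[str]:
    """Return the contents of every _("...") literal, in source order."""
    return TEXT_ELEMENT.findall(code)


def pretranslate(code: str, translated: set[str],
                 database: dict[str, list[str]]) -> list[PretranslationRow]:
    rows = []
    for text in extract_text_elements(code):
        if text in translated:          # already translated: nothing to do
            continue
        # Associate retrieved translations; an empty list means none were found.
        rows.append(PretranslationRow(text, list(database.get(text, []))))
    return rows


code = 'menu.add(_("File")); menu.add(_("Open")); button(_("Close"))'
db = {"File": ["Datei"], "Close": ["Schließen", "Beenden"]}
for row in pretranslate(code, translated={"Open"}, database=db):
    print(row)
```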

- The method thus supports a software localizer (or user) by offering one or more possible translations of each text element contained in the source code of a software program. The user can simply review the translation proposals retrieved from the database.

- The method thereby facilitates adapting different software to particular cultural, geographic, or linguistic environments.

- The method may further include validating the retrieved translation elements and merging the source code with the validated translation elements. These steps let the user easily decide whether to accept (validate) or discard each suggested translation.

- The validation of a suggested translation may be performed automatically if the user has already validated the same translation element in another section of the source code.

- Merging the source code with the validated translation elements means extending the collection of text elements for one language in the source code with the validated translation elements in the other language(s).

- The merging may be performed after the whole text has been translated.
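
Validation and merging can be sketched as follows. The `decide` callback stands in for the user's accept/discard choice, and the per-language string table is a hypothetical in-memory stand-in for the text collections in the source code. Once a source text has been validated, later occurrences of the same element are validated automatically without asking again, and merging happens only after the whole text has been processed.

```python
from typing import Callable, Optional

# decide(source, proposals) returns the accepted translation, or None to discard.
Decide = Callable[[str, list[str]], Optional[str]]


def validate(rows: list[tuple[str, list[str]]], decide: Decide) -> dict[str, str]:
    """Ask the user per element; elements validated elsewhere are reused."""
    validated: dict[str, str] = {}
    for source, proposals in rows:
        if source in validated:         # validated in another section already
            continue
        choice = decide(source, proposals)
        if choice is not None:
            validated[source] = choice
    return validated


def merge(table: dict[str, dict[str, str]], lang: str,
          validated: dict[str, str]) -> None:
    """Extend the string table with the validated `lang` translations."""
    for source, translation in validated.items():
        table.setdefault(source, {})[lang] = translation


rows = [("File", ["Datei"]), ("Save", ["Speichern", "Sichern"]),
        ("File", ["Datei"]), ("Help", [])]
table = {"File": {"en": "File"}, "Save": {"en": "Save"}, "Help": {"en": "Help"}}
merge(table, "de", validate(rows, lambda s, p: p[0] if p else None))
print(table["Save"])  # {'en': 'Save', 'de': 'Speichern'}
```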

- The method may further include compiling the merged source code to generate a localized software program in binary code.

- This software program preferably includes one or more text libraries in languages different from the language of the original source code. Compilation may be the last step in the generation of a localized software version, depending on the programming language.

- An additional step of binding may be executed when the programming language requires the binding of sub-modules or sub-libraries after compilation. In this case compilation is followed by one or more binding steps. Depending on the programming language, the compilation may include an assembling operation.

- The method may also include adapting the language of texts related to the software (e.g., manuals and Help files), which illustrate the use of the software and support the user.

- Software is often provided with additional texts such as “Help” files, manuals, and packaging prints.

- These texts should be provided with translations that match the translations used in the source code of the software.

- The software-related text may be translated through the additional steps of searching for untranslated elements of the software-related text, retrieving translation elements for each of these untranslated elements on the basis of, and in accordance with, the associations of the retrieved source code translation elements, and associating the untranslated elements of the software-related text with the retrieved translation elements.

- The step of retrieving translation elements for each untranslated text element of the software-related text on the basis of the source code associations may be preceded by validation, merging, or compilation of the associated source code translation elements, with validated entries preferred.
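
A small sketch of how a manual or Help text might reuse the source-code associations, listing validated translations ahead of unvalidated ones. The `(source, translation, validated)` triples and whole-element matching are simplifying assumptions; a real manual would usually need matching below the sentence level.

```python
def related_text_proposals(
    elements: list[str],
    associations: list[tuple[str, str, bool]],
) -> dict[str, list[str]]:
    """Map each software-related text element to proposals taken from the
    source-code associations, validated translations first."""
    index: dict[str, list[tuple[str, bool]]] = {}
    for source, translation, validated in associations:
        index.setdefault(source, []).append((translation, validated))
    # sorted() is stable, so the original order is kept within each group.
    return {
        element: [t for t, _ in sorted(index.get(element, []), key=lambda p: not p[1])]
        for element in elements
    }


associations = [("Save", "Sichern", False), ("Save", "Speichern", True),
                ("Print", "Drucken", True)]
print(related_text_proposals(["Save", "Print", "Exit"], associations))
# {'Save': ['Speichern', 'Sichern'], 'Print': ['Drucken'], 'Exit': []}
```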

- The method may be executed in a local area network (LAN), in which different teams of translators access and retrieve translation suggestions from the same table, dictionary, library, and/or storage unit.

- The storage unit may be dedicated to a single-language software localization (i.e., there is one translation sub-dictionary for each language in the localized software version). This feature may be combined with a composite translation dictionary structure, in which a set of keywords defining the minimum terminology requirements is stored in and retrieved from a localization-specific database. Text elements that do not contain these keywords may be retrieved from a common database.
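
The composite dictionary structure can be sketched as a two-level lookup: text elements containing one of the defined keywords are resolved against the localization-specific database, and all others against the common database. The word-level keyword test and the dictionary contents are illustrative assumptions.

```python
from typing import Optional


def contains_keyword(text: str, keywords: set[str]) -> bool:
    """Case-insensitive whole-word test against the keyword list."""
    words = set(text.lower().split())
    return any(k.lower() in words for k in keywords)


def lookup(text: str, keywords: set[str],
           local_db: dict[str, str], common_db: dict[str, str]) -> Optional[str]:
    """Keyword-bearing texts come from the localization-specific database."""
    db = local_db if contains_keyword(text, keywords) else common_db
    return db.get(text)


keywords = {"File"}                          # hypothetical minimum terminology
local_db = {"Open file": "Datei öffnen"}     # localization-specific entries
common_db = {"Open file": "Akte öffnen", "Cancel": "Abbrechen"}
print(lookup("Open file", keywords, local_db, common_db))  # Datei öffnen
print(lookup("Cancel", keywords, local_db, common_db))     # Abbrechen
```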

- Another exemplary method translates text elements, either in conjunction with or independently of the translation of software source code.

- This method uses text elements of the software-related text and their respective translations stored in a database.

- The searched untranslated source-language element is associated with the retrieved translation elements if, and once, such translation elements are found.

- The method supports a translator by offering one or more possible translations of a source text element.

- The user or translator can easily decide whether to accept or discard each suggested translation.

- The main difference from conventional translation programs is that the grammar of the source text element is not checked. This is unnecessary because only the text structure of the source-language element has to match a previously translated text element. The process resembles having an analphabet (i.e., an illiterate person) compare a pattern (the source text element) with the patterns previously stored in a card index and attach the corresponding index card (bearing the translation element) at the place in the source text where the pattern was found.

- A translator can then accept or discard the index card instead of translating each text element himself, which increases translation speed.

- The performance of the pretranslation therefore depends only on the retrieval speed of the index card and on the size of the card index, not on the linguistic abilities of the user performing the translation.

- Two or more users may search the card index at the same time. In another example, users can also take index cards newly written by another user and sort them into the card index.

- The associating may be performed by entering the retrieved translation elements into a table.

- Using tables simplifies the processing of the text elements and enables developers to use database management systems (DBMS) to implement the example on a computer.

- Tables further simplify access to the translation elements when these are retrieved from a database.
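
Because the associating step is table-based, it maps naturally onto a DBMS. The sketch below uses Python's built-in `sqlite3` with two hypothetical tables, `dictionary` and `pretranslation`. The lookup is a pure exact comparison of the source text, with no grammatical analysis, mirroring the card-index analogy.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dictionary (source TEXT, target TEXT);
    CREATE TABLE pretranslation (elem_id INTEGER, source TEXT, proposal TEXT);
""")
conn.executemany("INSERT INTO dictionary VALUES (?, ?)",
                 [("Open file", "Datei öffnen"), ("Save", "Speichern")])


def pretranslate(conn: sqlite3.Connection, elements: list[tuple[int, str]]) -> None:
    """Attach every stored translation whose source matches exactly ("index card")."""
    for elem_id, source in elements:
        hits = conn.execute("SELECT target FROM dictionary WHERE source = ?",
                            (source,)).fetchall()
        for (target,) in hits or [(None,)]:   # NULL proposal: translate manually
            conn.execute("INSERT INTO pretranslation VALUES (?, ?, ?)",
                         (elem_id, source, target))


pretranslate(conn, [(1, "Open file"), (2, "Close window")])
print(conn.execute("SELECT * FROM pretranslation ORDER BY elem_id").fetchall())
# [(1, 'Open file', 'Datei öffnen'), (2, 'Close window', None)]
```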

- The user may interactively accept or discard entries in the pretranslation table.

- Interaction with the user has several advantages. First, the user can decide whether to accept a proposed entry. Second, if multiple entries are proposed, the user can select the best one, or can select one of the lower-rated translation suggestions and adapt it to the text at hand. The user can also choose between selecting one of the proposed translation elements in the pretranslation table and translating the text element manually.

- The translation method may include updating the dictionary database with the validated translation element.

- This extension of the database may be performed automatically (e.g., the dictionary database is updated whenever a translation element is validated).

- The database may be updated according to different procedures. For example, all validated translation elements may be transferred from the user device to the database, so that the database itself determines whether each validated translation element is already stored or is to be stored as a new translation element. Alternatively, only newly generated database entries may be transferred to the database.

- Preferably, a user, a user device, or the database checks whether a validated text element/translation element pair is already present in the database, to avoid accumulating a large number of similar or identical entries.
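
One way to let the database itself reject duplicate pairs, so that the user device can simply transfer all validated pairs, is a `UNIQUE` constraint combined with SQLite's `INSERT OR IGNORE`. This is a sketch using Python's `sqlite3`; the table layout and the helper `upload_validated` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# The UNIQUE constraint lets the database decide whether a pair is already stored.
conn.execute("CREATE TABLE dictionary (source TEXT, target TEXT, UNIQUE (source, target))")


def count_rows(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT COUNT(*) FROM dictionary").fetchone()[0]


def upload_validated(conn: sqlite3.Connection, pairs: list[tuple[str, str]]) -> int:
    """Transfer all validated pairs; pairs already stored are skipped.
    Returns the number of newly stored pairs."""
    before = count_rows(conn)
    conn.executemany("INSERT OR IGNORE INTO dictionary VALUES (?, ?)", pairs)
    conn.commit()
    return count_rows(conn) - before


print(upload_validated(conn, [("Save", "Speichern"), ("Open", "Öffnen")]))   # 2
print(upload_validated(conn, [("Save", "Speichern"), ("Print", "Drucken")]))  # 1
```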

- An additional validation by a second translator may be performed when updating the translation database, to prevent invalid translation elements from entering it.

- The second validation is easy to implement, as the second translator only has to determine whether a translation element matches the respective text element. It may also be possible for a user to mark certain translation proposals as invalid, to prevent the system from offering those translation elements again. This feature may be implemented as a “negative key ID” entry in a predetermined column of the translation table.
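
The “negative key ID” can be sketched as a flag column on the translation table: once a proposal is rejected, for example by the second validating translator, it is filtered out of future proposals. The `Entry` record and both helper functions are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    source: str
    target: str
    negative: bool = False   # hypothetical "negative key ID" column


def second_validation(entry: Entry, matches: bool) -> None:
    """The second translator only decides whether the pair matches."""
    entry.negative = not matches


def proposals(table: list[Entry], source: str) -> list[str]:
    """Offer only entries that have not been marked invalid."""
    return [e.target for e in table if e.source == source and not e.negative]


table = [Entry("Save", "Speichern"), Entry("Save", "Retten")]
second_validation(table[1], matches=False)
print(proposals(table, "Save"))  # ['Speichern']
```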

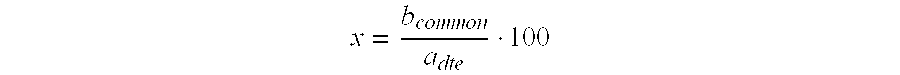

- In an additional step, an index may be generated that indicates the degree of conformity between a text element and an entry of the dictionary database. This index is useful if the text element to be translated and the text entries stored in the database differ only slightly, so that a translator can easily derive a correct translation from a slightly different database entry.

- Matches between an untranslated text element and a translated text element that are characterized by such an index may be described as fuzzy matches.

- The generated index may therefore be described as a “fuzziness” index of the matching element.

- The number of entries in the pretranslation table for one text element depends on the degree of matching.

- The preceding discussion places no limit on the number of matching translations retrieved from the translation database, so more than one matching database entry may be retrieved. If more than one entry is found, retrieval may be limited to entries whose matching index reaches a predetermined value. Alternatively, the number of retrieved database entries may be capped at a predetermined count. Either limit prevents a human translator from having to process a large number of translation proposals.

- For example, the number of translation proposals may be limited by a predetermined value of the matching index. If this predetermined matching index is set to zero, the user in the above example would retrieve the whole database for each source text element in the table.
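
The patent does not specify how the matching index is computed. As a stand-in, the sketch below uses the similarity ratio of Python's `difflib.SequenceMatcher` (1.0 for identical strings) and applies both limits described above: a minimum index and a maximum number of proposals. Parameter names and defaults are illustrative assumptions.

```python
from difflib import SequenceMatcher
from typing import Optional


def fuzzy_proposals(source: str, database: dict[str, str],
                    min_index: float = 0.7,
                    max_results: Optional[int] = 3) -> list[tuple[float, str, str]]:
    """Return (index, stored source, translation), best match first."""
    scored = []
    for stored, translation in database.items():
        index = SequenceMatcher(None, source, stored).ratio()
        if index >= min_index:
            scored.append((index, stored, translation))
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:max_results]      # slicing with None keeps everything


db = {"Open file": "Datei öffnen", "Open files": "Dateien öffnen", "Close": "Schließen"}
for index, stored, translation in fuzzy_proposals("Open file", db):
    print(f"{index:.2f}  {stored!r} -> {translation}")
```

Calling `fuzzy_proposals("Open file", db, min_index=0, max_results=None)` returns every database entry, which is the degenerate case noted above.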

- A translation entry of a source-language text element entry may be accepted automatically as a valid translation if the index indicates an exact match between the untranslated text element and the source-language text element entry.

- This option extends the translation support method described above into an automatic translator for text elements that have exactly matching database entries.
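
Exact-match automation reduces to a split: elements with an identical database entry (an index of 1.0) are accepted without review, and the rest are left for the translator. A minimal sketch, assuming a database that maps each source text to a single translation.

```python
def auto_translate(elements: list[str],
                   database: dict[str, str]) -> tuple[dict[str, str], list[str]]:
    """Accept exact matches automatically; return the rest for human review."""
    accepted: dict[str, str] = {}
    pending: list[str] = []
    for text in elements:
        if text in database:            # exact match of the whole text element
            accepted[text] = database[text]
        else:
            pending.append(text)
    return accepted, pending


accepted, pending = auto_translate(["Save", "Save as", "Print"],
                                   {"Save": "Speichern", "Print": "Drucken"})
print(accepted)  # {'Save': 'Speichern', 'Print': 'Drucken'}
print(pending)   # ['Save as']
```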

- This automatic translation method may save human translation effort when the texts to be translated are limited to a narrow linguistic field and the database is very large.

- Very large databases suggest centralized use of the translation method in computer networks such as local area networks or the Internet. If any network user can access the database for translation tasks, each accepted translation improves the database, so that a very large universal translation database can be built quickly.

- Entries may be marked as authorized by the provider's translators.

- The method may include marking translated, untranslated, or partially translated text elements.

- The markings may indicate the status of the translation, distinguishing between different grades of translation (e.g., fully translated, partially translated, or untranslated).

- The markings may be incorporated into the text or added to the translation table in a separate column.

- The markings may also indicate whether a specific table entry was translated by a translator or is an automatically generated proposal.
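
The markings can be kept as separate columns of the translation table. The sketch below derives a translation-status marking from a hypothetical list of segments and records whether the entry came from a translator or from an automatic proposal. The enum and field names are illustrative.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    UNTRANSLATED = "untranslated"
    PARTIAL = "partially translated"
    FULL = "fully translated"


class Origin(Enum):
    TRANSLATOR = "translator"
    AUTOMATIC = "automatic proposal"


def status_of(segments: list[tuple[str, Optional[str]]]) -> Status:
    """segments: (source, translation or None) pairs making up one text element."""
    done = sum(1 for _, translation in segments if translation)
    if done == 0:
        return Status.UNTRANSLATED
    return Status.FULL if done == len(segments) else Status.PARTIAL


@dataclass
class TableRow:
    elem_id: int
    status: Status    # status marking kept in its own column
    origin: Origin    # who produced the entry


segments = [("Click Save.", "Klicken Sie auf Speichern."), ("Then close.", None)]
row = TableRow(7, status_of(segments), Origin.AUTOMATIC)
print(row.status.value, "/", row.origin.value)  # partially translated / automatic proposal
```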